All Cylinders

20160428

Intro

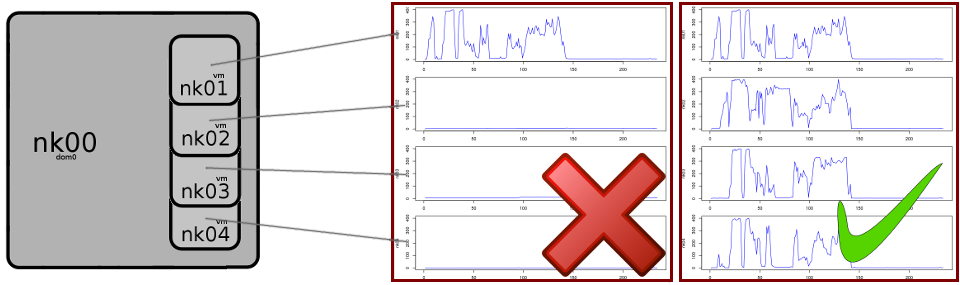

You have a development Spark Cluster, running on 4 Xen virtual images (named nk01, nk02.. ) on one and the same dom0 host (nk00) :

You want to write a Scala Spark job, submit it to the cluster, and monitor the load to check that all 4 nodes are pulling their weight!

This article shows:

- how to package a Scala Spark job using `sbt` and submit it on the cluster

- how to monitor the load on the nodes of your Xen virtual systems, using a 'shoestring-budget' monitoring method

- how to use R for visualization

Software used:

- spark-1.6.0 for hadoop

- hadoop-2.7.1 (hdfs/yarn)

- R version 3.2.4

- xentop on dom0